Build an AI-based browser phone extension

2023-05-01

Motivation

On March 14, 2023, OpenAI released GPT-4, a transformer-based model that OpenAI describes as more reliable and better at handling nuanced instructions than GPT-3.5. At release, GPT-4 outperformed other large language models (LLMs) on many benchmarks, which led to a surge of AI-powered applications built on it. The phone, meanwhile, remains a fundamental human communication need and a core communication service for businesses, so the potential for AI to improve the efficiency and quality of phone interactions is well worth exploring.

Unlike a traditional phone application, the browser phone extension we aim to build is AI-driven: it uses AI to assist at every stage of a phone call, with the goal of improving the user’s calling experience.

- Before a call, we want it to help us extract contact information from any third-party webpage, especially complex ones. Crucially, it should analyze the data associated with each identified contact (call recordings, voicemails, SMS, etc.), so that we can see the likely sentiment of each contact, get a draft summary to prepare for the upcoming call, and more.

- During a call, the spoken dialogue will be transcribed into text in real-time. Based on this live text conversation, the extension should offer effective intelligent prompts and assistance. It could even intelligently deploy an AI voicebot to participate in the conversation.

- After a call concludes, it will generate a sentiment assessment, a conversation summary, and a to-do list based on the current call information, the conversation transcript, and potentially past interactions with the contact. It will also automatically fill in forms on any CRM page.

Once the extension delivers these functions, phone workflows integrate seamlessly with the relevant platforms, which demonstrates a practical application of AI in optimizing the entire call workflow.

Integrated AI Services and Capabilities

Before implementing this extension, we should clearly define the AI services and capabilities we plan to integrate:

- OpenAI SDK: Provides the chat/completions API for intelligent content generation.

- OpenAI SDK: Provides the audio/transcriptions API for transcribing audio files to text.

- Azure: Additionally offers real-time STT (Speech-to-Text) and TTS (Text-to-Speech) APIs.

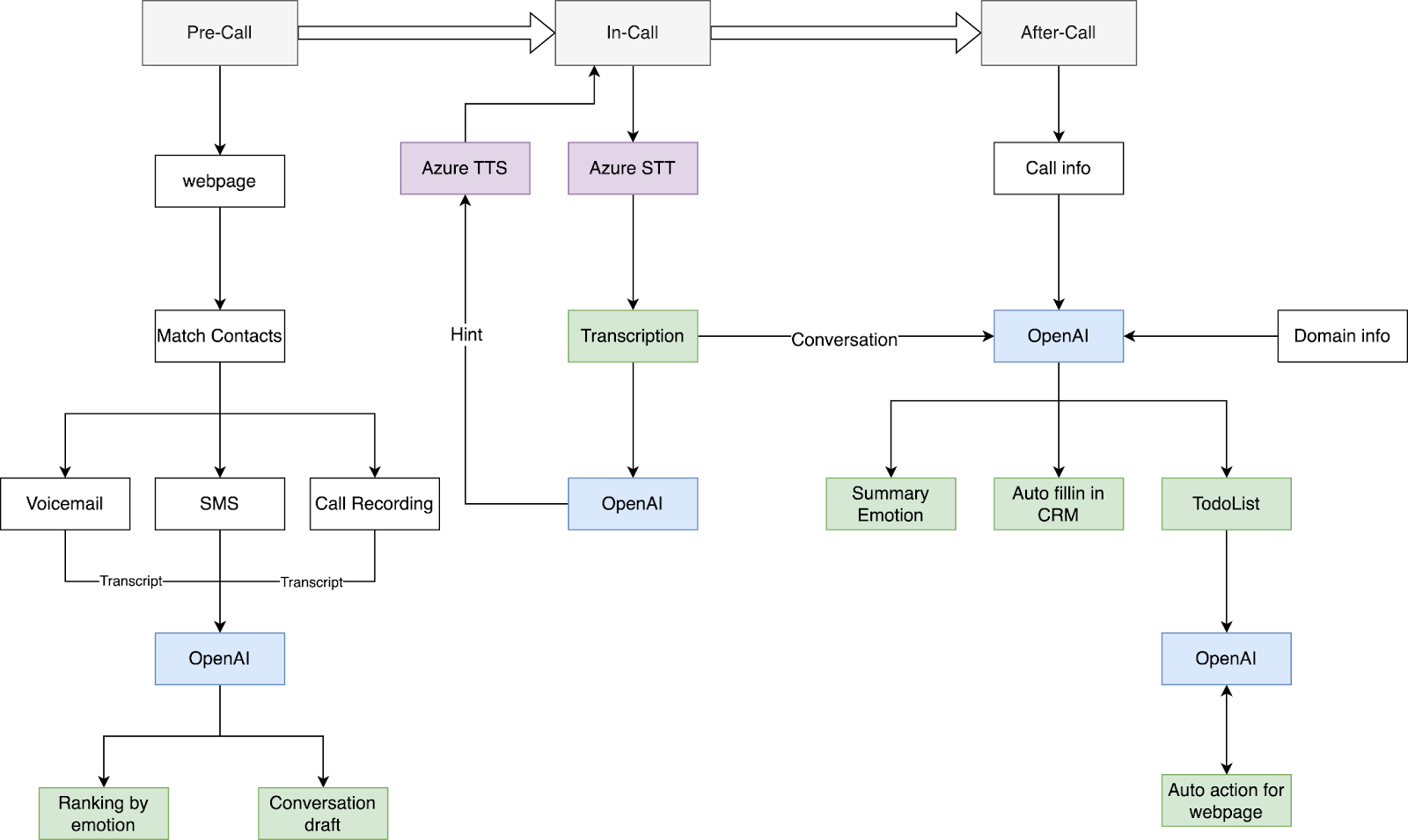

Flowchart

Based on the above three sets of APIs, combined with Browser Extension APIs, let’s design the overall business process:

Implementation

Creating a Basic Browser Phone Extension Project

We can base our project on the open-source browser-extension-boilerplate, which supports building browser extensions for multiple browsers:

- Chrome

- Firefox

- Opera (Chrome Build)

- Edge (Chrome Build)

- Brave

- Safari

We will use content.js as the content script that the extension injects by default into every tab, and background.js as the extension’s service worker, which opens a separate page (driven by client.js) that functions as the phone app.

Taking the manifest.json configuration of a Chrome MV3 extension as an example:

1 | { |

We should primarily focus on the permissions section:

- tabs and activeTab are mainly used for tab communication and status capture, etc.

- debugger will primarily be used for automating webpage operations.
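As a rough sketch only, a Chrome MV3 manifest for this layout might carry fields like the ones below. It is written as a JavaScript object so it can be serialized into manifest.json. The extension name, version, match patterns, and the exact permission list are assumptions made for illustration, not the configuration used in the original project.

```javascript
// Hypothetical manifest for illustration; name, version, and match
// patterns are assumptions, not values taken from the article.
const manifest = {
  manifest_version: 3,
  name: "AI Browser Phone (sketch)",
  version: "0.0.1",
  // background.js runs as the extension's service worker.
  background: { service_worker: "background.js" },
  // content.js is injected into every page the user opens.
  content_scripts: [{ matches: ["<all_urls>"], js: ["content.js"] }],
  // The permissions discussed in the text.
  permissions: ["tabs", "activeTab", "debugger"],
  host_permissions: ["<all_urls>"],
  action: { default_title: "Open phone" },
};

// Serialize to the JSON that would be saved as manifest.json.
const manifestJson = JSON.stringify(manifest, null, 2);
console.log(manifestJson);
```

Note that debugger is a powerful permission that triggers a visible browser warning, so it is worth requesting only if the automation features are actually used.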

Implementing Contact Information Extraction from Any Webpage

In content.js, we will implement basic content extraction from webpages:

1 | chrome.runtime.onMessage.addListener((message, sender, sendResponse) => { |
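One possible shape for such a listener is sketched below. The message type GET_PAGE_TEXT and the reply fields are hypothetical names chosen for this example; the document object is passed in as a parameter so the extraction logic can be exercised outside a browser.

```javascript
// Hypothetical message type used between client.js and content.js.
const GET_PAGE_TEXT = "GET_PAGE_TEXT";

// Build the reply for one message. `doc` is passed in so the logic can be
// tested without a real page.
function handlePageTextRequest(message, doc) {
  if (!message || message.type !== GET_PAGE_TEXT) return null;
  return {
    url: doc.location ? doc.location.href : "",
    title: doc.title || "",
    text: doc.body ? doc.body.innerText : "",
  };
}

// Register the listener only when running as an extension content script.
if (typeof chrome !== "undefined" && chrome.runtime && chrome.runtime.onMessage) {
  chrome.runtime.onMessage.addListener((message, sender, sendResponse) => {
    const reply = handlePageTextRequest(message, document);
    if (reply) sendResponse(reply);
    // The reply is sent synchronously, so the channel need not stay open.
    return false;
  });
}
```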

And in client.js, we will implement the corresponding request for webpage content:

1 | async function getActiveTab() { |
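A minimal sketch of the client side follows, under a few assumptions: the tabs API object (chrome.tabs in the extension) is passed in as a parameter, the message type GET_PAGE_TEXT is a hypothetical name, and the query is restricted to normal browser windows because the phone page itself runs in a separate popup window.

```javascript
// Find the tab the user is working in. The phone UI lives in its own popup
// window, so the query is limited to normal browser windows. If several
// windows are open, this simply takes the first match.
async function getActiveTab(tabsApi) {
  const tabs = await tabsApi.query({ active: true, windowType: "normal" });
  return tabs.length > 0 ? tabs[0] : null;
}

// Ask the content script in that tab for the page text.
async function requestPageText(tabsApi) {
  const tab = await getActiveTab(tabsApi);
  if (!tab) throw new Error("No active tab found");
  return tabsApi.sendMessage(tab.id, { type: "GET_PAGE_TEXT" });
}

// In the extension this would be called as: requestPageText(chrome.tabs)
```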

Here we use only the simple document.body.innerText API. A more complete extraction should also consider content visibility, iframe content, and many other factors. Due to space limitations, we won’t elaborate further here.

Next, we will submit the extracted content to the OpenAI API to extract contact information:

1 | async function fetchContactsInfo() { |

It’s worth mentioning that here we used a TypeScript interface as the prompt to ensure OpenAI returns a stable JSON data structure.
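To illustrate the idea, the sketch below embeds a TypeScript interface in the prompt and then parses the reply defensively. The field names in Contact and the wording of the prompt are assumptions for this example, not the prompt used in the original project.

```javascript
// The interface text is sent to the model verbatim as the output contract.
// Field names are assumptions made for this sketch.
const CONTACT_INTERFACE = [
  "interface Contact {",
  "  name: string;",
  "  phoneNumbers: string[];",
  "  email?: string;",
  "  company?: string;",
  "}",
  "type Result = Contact[];",
].join("\n");

function buildContactsPrompt(pageText) {
  return [
    "Extract every contact from the page text below.",
    "Reply with JSON only, matching the TypeScript type Result:",
    CONTACT_INTERFACE,
    "Page text:",
    pageText,
  ].join("\n\n");
}

// Models sometimes wrap JSON in a Markdown code fence; strip it if present.
const FENCE = "`".repeat(3);
const FENCE_PATTERN = new RegExp("^" + FENCE + "(?:json)?\\s*|\\s*" + FENCE + "$", "g");

function parseContacts(reply) {
  const data = JSON.parse(reply.trim().replace(FENCE_PATTERN, ""));
  if (!Array.isArray(data)) throw new Error("Expected a JSON array");
  // Keep only entries that satisfy the required fields of the interface.
  return data.filter(
    (c) => c && typeof c.name === "string" && Array.isArray(c.phoneNumbers)
  );
}
```

The interface only steers the model; it does not guarantee the shape of the reply, which is why the parsing step still validates each entry.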

fetchGPT is left for you to implement. For example, you can install the OpenAI SDK (openai) on your own API server and have the extension call that server, which keeps the API key out of the browser.
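A minimal client-side sketch of fetchGPT might look like this. The endpoint URL and the response shape ({ content: string }) are hypothetical: they stand for whatever your own API server exposes. The fetch implementation is injectable so the helper can be tested without a network.

```javascript
// Hypothetical endpoint on your own API server. The server holds the OpenAI
// key and forwards the request to the chat/completions API.
const API_ENDPOINT = "https://example.com/api/chat";

async function fetchGPT(prompt, fetchImpl = fetch) {
  const response = await fetchImpl(API_ENDPOINT, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ messages: [{ role: "user", content: prompt }] }),
  });
  if (!response.ok) {
    throw new Error(`fetchGPT failed with status ${response.status}`);
  }
  const data = await response.json();
  // Assumed response shape from the proxy: { content: string }.
  return data.content;
}
```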

Intelligent Contact Analysis and Sorting

Once we have obtained contact information, we can match it with contacts in the current phone system and also match the corresponding call recordings, voicemails, and SMS messages. SMS messages are typically text-based, so we can organize this information and submit it directly to OpenAI. However, call recordings and voicemails are audio files, so we will need to use the OpenAI audio/transcriptions API.

1 | async function transcriptVoicemail(files) { |

This way, we can obtain transcriptions of voicemails. Similarly, we can also get transcriptions of call recordings.
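As a sketch of the request involved: the audio/transcriptions API accepts a multipart form with a file and a model field (whisper-1), and by default returns JSON with a text field. The example below posts to a hypothetical proxy endpoint on your own server, which is assumed to forward the form to OpenAI and return the response unchanged. The function and parameter names are illustrative.

```javascript
// Hypothetical proxy endpoint that forwards the multipart form to the
// OpenAI audio/transcriptions API and returns its JSON response unchanged.
const TRANSCRIBE_ENDPOINT = "https://example.com/api/transcriptions";

// Transcribe one audio file (a Blob or File) and return the text.
async function transcribeAudio(file, fileName, fetchImpl = fetch) {
  const form = new FormData();
  form.append("file", file, fileName);
  form.append("model", "whisper-1");
  const response = await fetchImpl(TRANSCRIBE_ENDPOINT, {
    method: "POST",
    body: form,
  });
  if (!response.ok) {
    throw new Error(`Transcription failed with status ${response.status}`);
  }
  const data = await response.json();
  return data.text;
}

// Transcribe several files in parallel; each entry is { blob, name }.
async function transcribeAll(files, fetchImpl = fetch) {
  return Promise.all(
    files.map((f) => transcribeAudio(f.blob, f.name, fetchImpl))
  );
}
```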

Next, we will submit this information to OpenAI to analyze emotion:

1 | async function getEmotion(callRecordings, voiceMails, sms) { |

Once we have the emotion analysis for each contact, we can sort contacts by it so that users can see the likely mood of each customer relationship. This hints at how easy or difficult each conversation may be, allowing users to plan their call strategies and schedules accordingly.
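A minimal sketch of that sorting step, assuming each analysis has been reduced to a numeric sentiment score between -1 (very negative) and 1 (very positive). The score field, its range, and the label thresholds are assumptions; the article does not specify a format.

```javascript
// Assumed shape of one analysis: { contact: string, sentiment: number }
// where sentiment ranges from -1 (very negative) to 1 (very positive).
function sortBySentiment(analyses) {
  // Copy first so the caller's array is not mutated; most negative first,
  // so the hardest conversations appear at the top of the list.
  return [...analyses].sort((a, b) => a.sentiment - b.sentiment);
}

// Map a score to a coarse label for display (thresholds are arbitrary).
function sentimentLabel(score) {
  if (score <= -0.3) return "difficult";
  if (score >= 0.3) return "easy";
  return "neutral";
}

const example = sortBySentiment([
  { contact: "Ann", sentiment: 0.6 },
  { contact: "Bob", sentiment: -0.8 },
  { contact: "Cy", sentiment: 0.1 },
]);
console.log(example.map((a) => `${a.contact}: ${sentimentLabel(a.sentiment)}`));
```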

Real-time STT

Azure provides real-time STT and TTS services. We will use the official SDK, microsoft-cognitiveservices-speech-sdk, to implement STT:

1 | import * as sdk from "microsoft-cognitiveservices-speech-sdk"; |

In this way, using sdk.AudioConfig.fromStreamInput(stream) with our WebRTC stream allows us to achieve real-time STT. Furthermore, by calling this method separately for both the input and output streams of WebRTC, we can obtain real-time text content for both sides of the conversation.
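The wiring described above could be sketched as follows. The sdk argument is the imported microsoft-cognitiveservices-speech-sdk module, passed in as a parameter so the sketch carries no hard dependency; the function name, the speaker label, the onText callback, and the en-US language setting are assumptions for illustration.

```javascript
// `sdk` is the imported microsoft-cognitiveservices-speech-sdk module.
// `stream` is a MediaStream, e.g. one side of the WebRTC call.
function startTranscription(sdk, stream, speaker, { key, region, onText }) {
  const speechConfig = sdk.SpeechConfig.fromSubscription(key, region);
  speechConfig.speechRecognitionLanguage = "en-US"; // assumed language
  const audioConfig = sdk.AudioConfig.fromStreamInput(stream);
  const recognizer = new sdk.SpeechRecognizer(speechConfig, audioConfig);

  // Fires with the final text of each utterance.
  recognizer.recognized = (_sender, event) => {
    if (event.result.reason === sdk.ResultReason.RecognizedSpeech) {
      onText({ speaker, text: event.result.text });
    }
  };

  recognizer.startContinuousRecognitionAsync();
  return recognizer;
}
```

Calling it once with the local microphone stream (for example with speaker "me") and once with the remote stream (speaker "them") yields one labeled transcript per side, which can then be merged into a single conversation by arrival order.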

It’s worth noting that microsoft-cognitiveservices-speech-sdk supports a broad set of input and output stream configurations, which lets it accommodate a wide range of STT scenarios.

Intelligent Hints During Calls

In the Azure SDK, the recognizing event carries intermediate hypotheses while speech is still in progress, and the recognized event fires with the final result for an utterance, typically once the speaker pauses. When recognized fires, we can submit the conversation text so far to OpenAI to obtain potentially useful information:

1 | async function getHint(conversation) { |

The hints we obtain will also be displayed in the conversation UI, shown to the user in real-time to provide this intelligent assistance. For example, if the conversation involves discussing discount calculations, the AI will provide the calculated results.
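One way to prepare such a request is sketched below. It builds a messages array in the chat/completions format from the most recent turns only, so each request stays small as the call goes on. The window size, the system prompt wording, and the NONE convention for "no hint" are assumptions of this sketch.

```javascript
// Only the most recent turns are sent, to bound the size of each request.
const MAX_TURNS = 12; // assumed window size

// conversation: [{ speaker: string, text: string }]
function buildHintMessages(conversation) {
  const recent = conversation.slice(-MAX_TURNS);
  const transcript = recent.map((t) => `${t.speaker}: ${t.text}`).join("\n");
  return [
    {
      role: "system",
      content:
        "You assist a user during a live phone call. Given the transcript " +
        "so far, reply with one short, factual hint (for example the result " +
        "of a calculation), or reply NONE if no hint is useful.",
    },
    { role: "user", content: transcript },
  ];
}

// Turn the model's reply into a hint to display, or null for no hint.
function extractHint(reply) {
  const text = reply.trim();
  return text === "" || text.toUpperCase() === "NONE" ? null : text;
}
```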

Generating Emotion, Summary, and To-Do List After the Call

We will generate reports such as emotion analysis and summaries by using the complete conversation text as part of the prompt for OpenAI:

1 | async function getReport(conversation) { |

In a similar manner, we will also generate a to-do list for the current conversation:

1 | async function getTodoList(conversation) { |
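As an illustration of the post-call step, the sketch below asks for sentiment, summary, and to-dos in a single request and validates the reply before it is shown to the user. Merging the two requests into one, as well as the Report type and its field names, is a simplification assumed here; the article uses separate getReport and getTodoList functions.

```javascript
// Output contract sent to the model; field names are assumptions.
const REPORT_TYPE = [
  "interface Report {",
  '  sentiment: "positive" | "neutral" | "negative";',
  "  summary: string;",
  "  todos: string[];",
  "}",
].join("\n");

// conversation: [{ speaker: string, text: string }]
function buildReportPrompt(conversation) {
  const transcript = conversation
    .map((t) => `${t.speaker}: ${t.text}`)
    .join("\n");
  return [
    "Analyze the finished call below.",
    "Reply with JSON only, matching this TypeScript type:",
    REPORT_TYPE,
    "Transcript:",
    transcript,
  ].join("\n\n");
}

// Validate the reply instead of trusting it blindly.
function parseReport(reply) {
  const data = JSON.parse(reply);
  const sentiments = ["positive", "neutral", "negative"];
  if (!sentiments.includes(data.sentiment)) {
    throw new Error("Unexpected sentiment value");
  }
  if (typeof data.summary !== "string") throw new Error("Missing summary");
  // Drop empty or non-string to-do items rather than failing the report.
  const todos = Array.isArray(data.todos)
    ? data.todos.filter((t) => typeof t === "string" && t.trim() !== "")
    : [];
  return { sentiment: data.sentiment, summary: data.summary, todos };
}
```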

Intelligently Filling Out Forms

As a fundamental communication service for businesses, phone systems are commonly integrated with third-party CRM platforms. Intelligently auto-filling CRM forms can therefore save business (B2B) users a good deal of manual work.

To implement intelligent form filling, the first step is to address how to extract and compress DOM information from form pages of any third-party CRM. Here, we reference TaxyAI’s DOM compression model:

By traversing all nodes in the DOM and analyzing their validity, we retain valid attribute information and assign a DI (presumably “DOM Identifier”) tag to each valid node.

1 | const allowedAttributes = [ |
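To make the compression idea concrete, here is a simplified sketch, not TaxyAI's actual implementation. It walks an element tree, drops nodes that are neither interactive nor carry text, keeps a small allow-list of attributes, and tags each kept node with a numeric data-id. The attribute list, the set of interactive tags, and the data-id attribute name are assumptions of this sketch, and visibility checks are omitted.

```javascript
// Illustrative allow-list; these attribute names are assumptions.
const KEPT_ATTRIBUTES = [
  "aria-label", "name", "type", "placeholder", "value", "role", "title",
];
const INTERACTIVE_TAGS = new Set(["a", "button", "input", "select", "textarea"]);

// Walk an element tree and emit a compact HTML-like string. Each kept node
// is tagged with a numeric data-id so the model can refer back to it.
function compressDom(root) {
  let nextId = 0;
  const visit = (el) => {
    const tag = el.tagName.toLowerCase();
    const children = Array.from(el.children).map(visit).join("");
    // Only leaf nodes contribute their own text.
    const ownText =
      el.children.length === 0 ? (el.textContent || "").trim() : "";
    // Drop wrappers that carry nothing useful, keeping their descendants.
    if (!INTERACTIVE_TAGS.has(tag) && !ownText) return children;
    const id = nextId++;
    el.setAttribute("data-id", String(id));
    const attrs = KEPT_ATTRIBUTES
      .filter((name) => el.getAttribute(name))
      .map((name) => ` ${name}="${el.getAttribute(name)}"`)
      .join("");
    return `<${tag} data-id="${id}"${attrs}>${ownText}${children}</${tag}>`;
  };
  return visit(root);
}
```

In a content script this would be called as compressDom(document.body); the data-id values written to the live page are what later steps can use to locate the same elements again.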

Once we have the compressed DOM information, we can also derive the page’s form labels from it, by analyzing attributes such as visibility, effective width and height, and operability. Then, we submit this to OpenAI:

1 | async function getFieldValues( |

Once we have fieldValues, we submit the current page and fieldValues to OpenAI, so that it can generate the values for the corresponding input fields:

1 | async function getInputValues(fieldValues, dom) { |

Then, we send these values to the current CRM form page to complete the automatic form filling.

In client.js, we send inputValues:

1 | async function sendInputValues() { |

In content.js, complete the final input filling:

1 | chrome.runtime.onMessage.addListener((message, sender, sendResponse) => { |
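The filling step itself could look roughly like this. The sketch assumes inputValues is a list of { id, value } pairs and that each target element carries a matching data-id attribute; both the payload shape and the attribute name are assumptions. The document is passed in so the function can run outside a browser.

```javascript
// inputValues: [{ id, value }], where id matches a data-id attribute on the
// target element (an assumption of this sketch).
function fillInputs(doc, inputValues) {
  let filled = 0;
  for (const { id, value } of inputValues) {
    const el = doc.querySelector(`[data-id="${id}"]`);
    if (!el) continue; // the page may have changed since it was analyzed
    el.value = value;
    // Many pages react to these events rather than reading .value directly.
    el.dispatchEvent(new Event("input", { bubbles: true }));
    el.dispatchEvent(new Event("change", { bubbles: true }));
    filled += 1;
  }
  return filled;
}

// In content.js this would be called from the message listener, e.g.
// fillInputs(document, message.inputValues)
```

Returning the number of fields actually filled gives the client a simple way to tell the user when some values could not be applied.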

We omit the details of handling more complex form types.

Browser Automated To-Do Execution

For this step we again draw on TaxyAI, this time on its model for automated DOM operations, which uses OpenAI to control the browser and perform repetitive actions on behalf of the user.

By appending Thought and Action contextual prompts, OpenAI will iteratively and logically deduce the next DOM operation until the current to-do item is fulfilled.

This is the crucial function for formatting the prompt:

1 | function formatPrompt(taskInstructions, previousActions, pageContents) { |
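A simplified sketch of such a function is shown below, together with a parser for the reply. The prompt wording, the Thought and Action tag syntax, and the shape of previousActions are assumptions modeled loosely on the description above, not TaxyAI's exact prompt.

```javascript
// previousActions: [{ thought: string, action: string }]
function formatPrompt(taskInstructions, previousActions, pageContents) {
  const history = previousActions.length
    ? previousActions
        .map((a) => `<Thought>${a.thought}</Thought>\n<Action>${a.action}</Action>`)
        .join("\n\n")
    : "None yet.";
  return [
    "The user requests the following task:",
    taskInstructions,
    "You have already taken these actions:",
    history,
    "Reply with exactly one <Thought> and one <Action> for the next step.",
    "Current page contents:",
    pageContents,
  ].join("\n\n");
}

// Extract the next step from the model's reply.
function parseResponse(reply) {
  const thought = reply.match(/<Thought>([\s\S]*?)<\/Thought>/);
  const action = reply.match(/<Action>([\s\S]*?)<\/Action>/);
  if (!thought || !action) {
    return { error: "Reply is missing <Thought> or <Action>" };
  }
  return { thought: thought[1].trim(), action: action[1].trim() };
}
```

Each parsed step is executed, appended to previousActions, and the prompt is rebuilt with the refreshed page contents, which is what lets the model work toward the to-do item one operation at a time.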

Conclusion

At this point, we have essentially implemented an AI-based browser phone extension that covers the entire phone call workflow.

This article is a proof of concept (PoC) that outlines the general implementation of each stage. The application still needs work in areas such as cost, privacy and security, and performance. For instance, cost could be controlled by counting tokens in advance and splitting long inputs into segmented requests, and privacy concerns could be addressed by de-sensitizing data locally with a Web LLM before it leaves the browser.